Creating a C2 using blob.core.windows.net

In November 2023, I presented "Go by Example: Creating a C2 framework while trolling Microsoft" at CHCON. The recording is available on YouTube if you'd prefer to watch; this post covers the core points of that talk.

Background

If you've ever talked to me about smart homes and automation or read some of my other blog posts, you’ll have seen that I have a fairly complex internal network architecture.

(I have waaaayyyyyy too many computers, and no time to manage them all.)

Which is why, in early 2023, I set myself a "simple" task: build a unified management portal for my fleet of IoT devices, so that I don’t have to spend all my free time running around typing sudo apt update && sudo apt upgrade -y into everything.

Specifically, I wanted:

- A single agent process that I could load onto all my devices

- A simple microservice backend to coordinate running tasks on the agents

- A simple CLI tool that I could use to query and run commands at scale

I could have used any of the countless RMM tools available to do this, but I had an idea for doing it differently because, as the title of the talk implies, I had a bit of Microsoft trolling to do 😉.

The Design

In my day job I write a lot of Python, and am quite familiar with using Azure Functions, so my first thought was to simply stick to what I know.

But I've recently started reimplementing parts of my home automation stack in Go. Mostly because I enjoy learning new things: I'd played around with contributing to other Go projects, but I wanted to understand a fully worked Go example. But also because Go compiles into a neat, self-contained binary, which is ideal for my use cases, where task-specific software runs on home automation devices. On top of all those apt updates, I was also tired of python3 -m venv .env && source .env/bin/activate && pip install --upgrade pip && pip install --upgrade -r requirements.build.txt && pip freeze > requirements.txt.

And while I could have used Go to write both the agent and the backend microservice, I decided that the future is truly code-less architecture, so I built the server side using Azure Blob Storage.

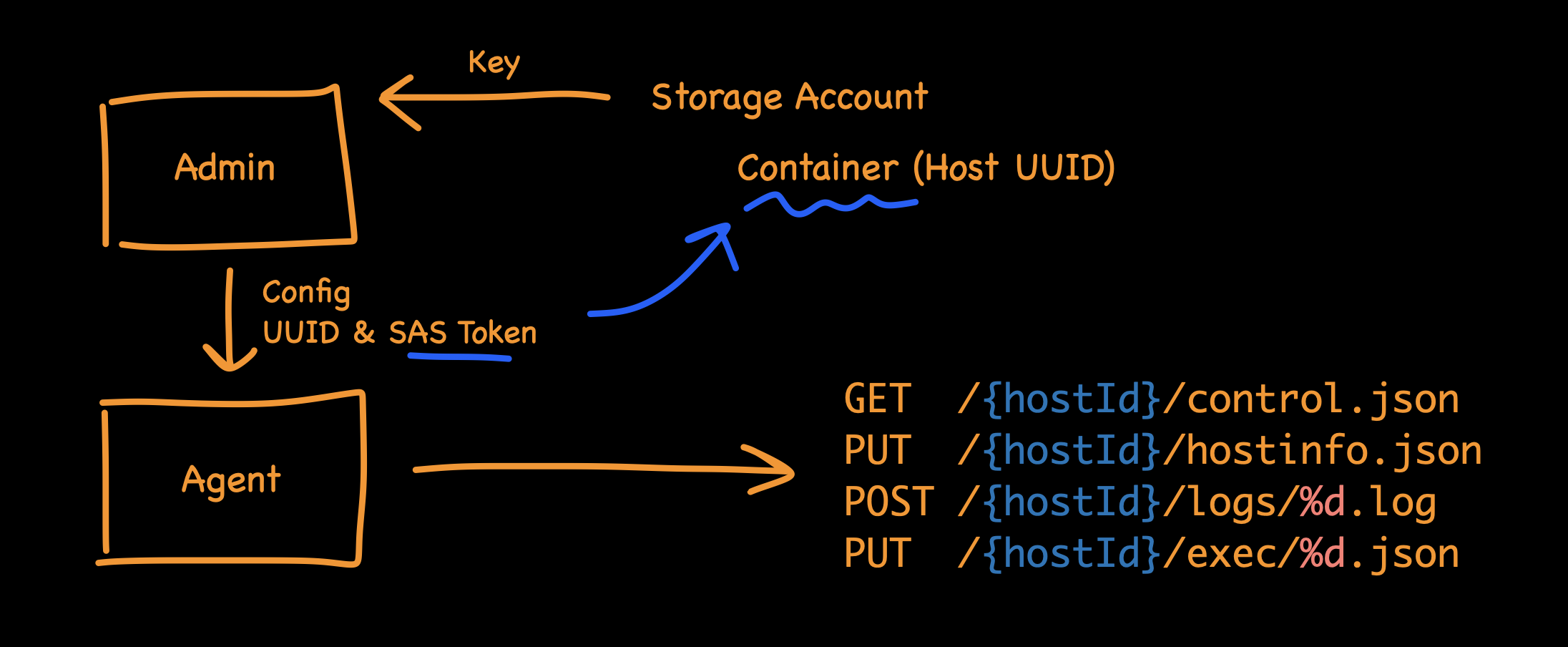

Which... isn't as crazy as you might first think. Once you look at the API design, the agent only needs a couple of basic APIs:

- a PUT to report its current status

- a GET to fetch instructions (like if it needs to run an update command)

- a PUT to upload results (e.g., if I want to fetch a file remotely)

- a POST to capture logs for diagnostics

All of these map directly onto native Blob Storage GET/PUT/POST operations. I can create a single Blob Storage container to hold all the agents, and use SAS tokens to segment each agent, so that a malicious agent can't interfere with the others.
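To make the layout a little more concrete, here is a minimal Go sketch of how per-agent blob URLs might be built. The account name, the `agents` container, the one-prefix-per-agent layout and the blob names are all hypothetical; the post doesn't describe the real naming scheme or how the SAS tokens are scoped, so the function simply appends whatever SAS query string it's handed.

```go
package main

import (
	"fmt"
	"net/url"
)

// blobURL builds https://<account>.blob.core.windows.net/<container>/<agentID>/<name>.
// The per-agent prefix layout is a hypothetical illustration. If sas is
// non-empty it is appended verbatim as the query string.
func blobURL(account, container, agentID, name, sas string) string {
	u := url.URL{
		Scheme:   "https",
		Host:     account + ".blob.core.windows.net",
		Path:     "/" + container + "/" + agentID + "/" + name,
		RawQuery: sas, // String() only adds "?" when this is non-empty
	}
	return u.String()
}

func main() {
	// Hypothetical blobs, one per operation in the list above:
	// status report, instructions, uploaded results and diagnostic logs.
	for _, name := range []string{"status.json", "instructions.json", "results/report.txt", "agent.log"} {
		fmt.Println(blobURL("examplefleet", "agents", "pi-kitchen", name, ""))
	}
}
```

In this sketch each agent operation is just an HTTPS request against one of these URLs, with nothing running on the server side.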

Using a Storage account as an API backend has numerous benefits, including:

- No-code, truly serverless; nothing on the backend requires any maintenance whatsoever

- Cloud-native scalability: I can scale from 1 to 1bn devices instantly

- Cost-effective, at ~$0.000004 (NZD) per operation!

- Super fast: no waiting for stopped applications to start

- No firewall rules to add

If you'd like to see it in action, go watch the YouTube video. And no, sorry, I haven't open-sourced the code. 🙂

No firewall rules to add?

No firewall rules to add, because you've already added them.

Remember that Microsoft trolling I promised?

See, Azure Blob Storage is so popular with Microsoft product teams that just about every Microsoft product asks you to add *.blob.core.windows.net to your firewall or proxy allow lists. Seriously, go look at the Microsoft documentation. Some highlights include:

- Office 365

- Windows Delivery Optimisation

- Entra Connect

- Defender for Endpoint, although at least they say: "Don't exclude the URL *.blob.core.windows.net from any kind of network inspection"

- Defender for Cloud Apps

- Visual Studio

- PowerApps

- Azure Backup

- Azure Site Recovery

Does this count as a vulnerability?

I've tried to raise this with Microsoft, including people I know who work there, and have always gotten a lukewarm response.

This is not exactly a new technique. There are documented examples of attackers using Azure services to host phishing sites. I even managed to find an example of someone who tried to report abuse to Microsoft, gave up, and posted in the Learn forums in frustration. But as far as I can find, I'm the first to use Azure Storage as an RMM / C2.

The point is that each Azure Storage account should be treated like any other website, with all the typical controls you would expect. Everyone should expect services that are available to anyone to be abused. In particularly risk-averse organisations, this means accounts (domains) should be checked to see whether they're newly seen, or are hosting content that contains brand impersonation.
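As a minimal sketch of what treating each account like its own website could look like in an egress filter, the Go code below extracts the storage account name from a hostname and only allows it if that account is on an explicit list, rather than matching *.blob.core.windows.net. The approved account names are hypothetical, the host is assumed to arrive without a port, and other endpoint forms aren't special-cased: a `-secondary` endpoint is treated as a separate account name, and private-link hostnames are rejected outright.

```go
package main

import (
	"fmt"
	"strings"
)

// blobSuffix is the public Blob Storage endpoint suffix. The leading dot
// ensures a host like "evilblob.core.windows.net" doesn't match.
const blobSuffix = ".blob.core.windows.net"

// accountFromHost returns the storage account name for a host directly under
// blob.core.windows.net, and false for anything else (including nested names).
func accountFromHost(host string) (string, bool) {
	h := strings.ToLower(strings.TrimSuffix(host, ".")) // normalise case and trailing dot
	if !strings.HasSuffix(h, blobSuffix) {
		return "", false
	}
	account := strings.TrimSuffix(h, blobSuffix)
	if account == "" || strings.Contains(account, ".") {
		return "", false
	}
	return account, true
}

// allowed reports whether host belongs to an explicitly approved account,
// instead of trusting every account under the wildcard.
func allowed(host string, approved map[string]bool) bool {
	account, ok := accountFromHost(host)
	return ok && approved[account]
}

func main() {
	// Hypothetical allow list of accounts you have actually verified.
	approved := map[string]bool{"examplecorpbackup": true}
	for _, h := range []string{
		"examplecorpbackup.blob.core.windows.net",
		"attackerowned.blob.core.windows.net",
		"evilblob.core.windows.net",
	} {
		fmt.Printf("%s allowed=%v\n", h, allowed(h, approved))
	}
}
```

Matching on the full account label rather than the domain suffix means a newly created account that someone else controls is denied by default.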

But while this is a known issue, Microsoft product teams either aren't aware of it or aren't taking steps to reduce its impact. Instructing IT teams to explicitly trust every Azure Storage account, by adding *.blob.core.windows.net to security bypass lists, is downright dangerous, especially when you're trying to enforce a strict outbound control list, such as when your company is being hit by ransomware and you're trying to cut off internet access while still allowing core tools (like EDR and directory sync) to function.

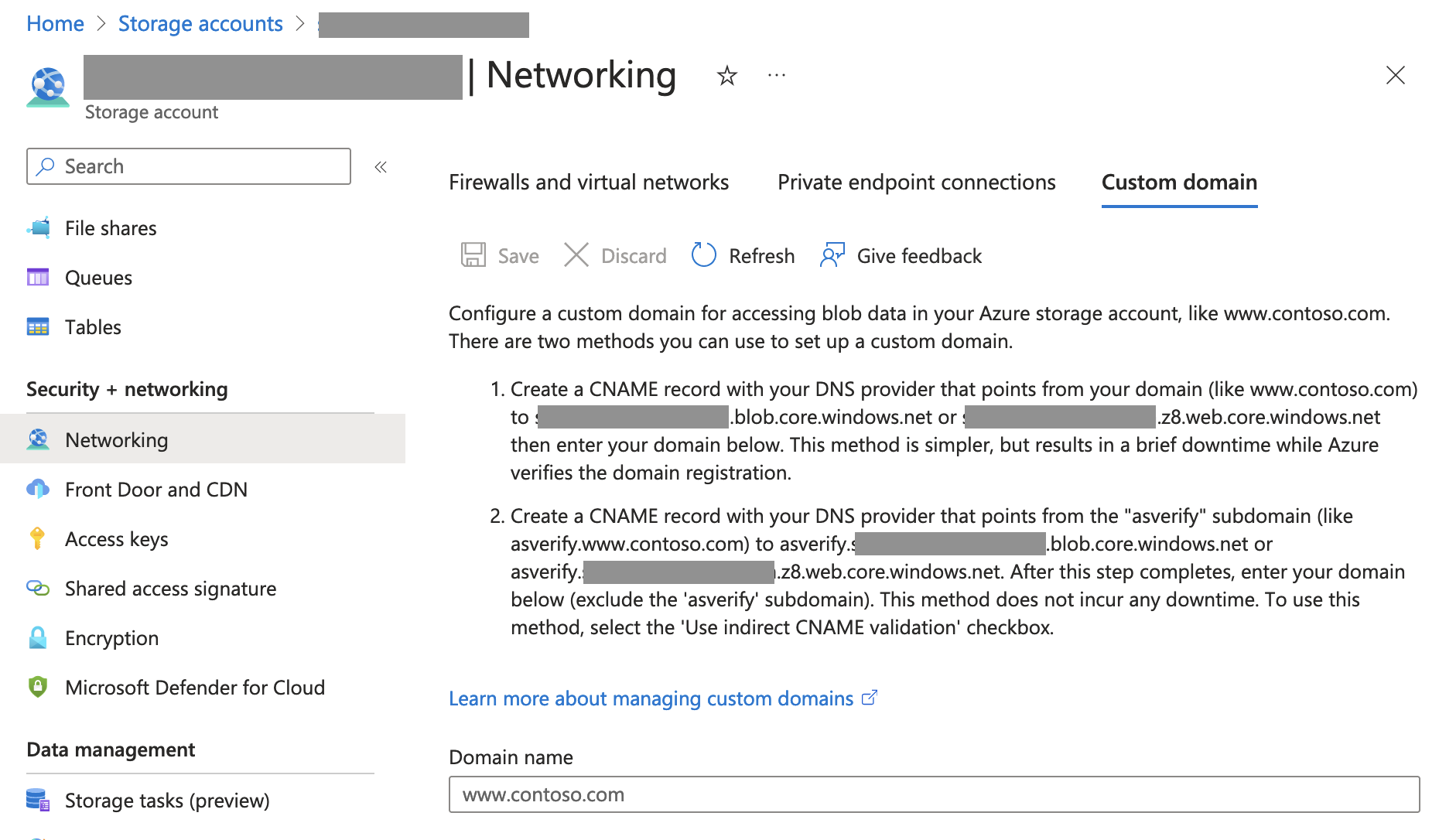

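In my opinion, Microsoft need to do a stocktake of their official Azure Storage accounts, and at least put them behind a CNAME on a Microsoft-only domain like *.blob.cloud.microsoft. Storage accounts support custom domains, and it's not even very difficult to do: you create a CNAME record pointing the custom domain at the account's blob endpoint, then register that domain on the storage account.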

Epilogue: how many official Microsoft Azure Storage accounts are there?

Spoiler: I don't know.

I've been trying to find out, though. By observing network traffic, I've found at least 209 Storage accounts used for OneDrive for Business and 34 used for Defender for Endpoint.